whack-a-service [v03]

scope:

the goal of this project is to provide a pre-tested layer of protection for publishing a service through an external machine, ideally a cheap VPS with no important data on board.

this approach gives us "highly mobile" services, since they are not published directly to the world.

requisites:

- no sensitive data on the external machines (so NO ssl certificates)

- somewhat redundant

- easy-ish to manage

- almost-"cattle" approach to the external machines

pros:

- decoupling the exit (or entrance) point of a service from its location

- almost open participation: anyone in your trust circle can contribute by managing an external machine

cons:

- management overhead

- more machines

- latency (the longer the path, the higher the latency)

- whoever manages the DNS remains the central point of control

- HSTS might interfere with how haproxy reads the SNI and forwards the connection without terminating HTTPS (still to be verified)

idea:

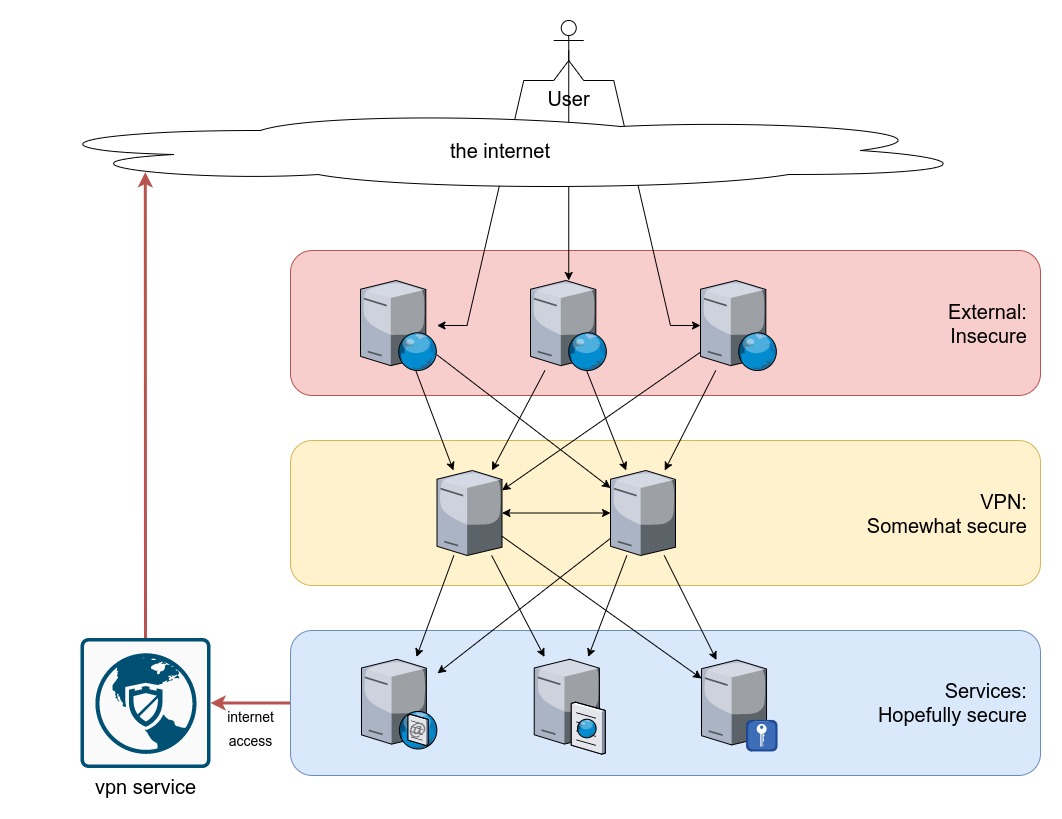

layers:

External:

this layer contains the external machines, which are configured with:

- haproxy

- bind

- an auto-updater script that pulls the config changes from a git repo

the DNS records of the services point to these machines; see the bind section for more info on the redundancy approach.

VPN:

this layer provides anonymity and flexibility between the external machines and the ones with the services on board.

we built this with a full-mesh VPN such as tinc, which provides a "virtual L2 switch" with all the machines connected.

the service machines and the external ones connect to the 2 (or more) machines of this layer

Services:

in this layer we find the machines that provide the actual services

what we use:

haproxy:

haproxy proxies the connections without terminating SSL, so no SSL certificate has to live on the external machines: it reads the SNI from the TLS handshake and, using ACLs, sends the connection to the correct backend.
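as a rough illustration of what "reading the SNI" means, the Python sketch below pulls the server name out of a raw TLS ClientHello and looks it up in a table, much like an ACL would. it is not haproxy's implementation or configuration: `BACKENDS`, the host names and the VPN addresses are made up for the example, and it assumes the whole ClientHello arrives in a single TLS record.

```python
import struct
from typing import Optional, Tuple

# Hypothetical SNI -> backend table (addresses on the VPN), for illustration only.
BACKENDS = {
    "git.domain.net": ("10.0.0.11", 443),
    "chat.domain.net": ("10.0.0.12", 443),
}


def extract_sni(record: bytes) -> Optional[str]:
    """Return the SNI host name of a raw TLS ClientHello record, or None."""
    try:
        # Record header: type (1 byte, 0x16 = handshake), version (2), length (2).
        # Handshake header: type (1 byte, 0x01 = ClientHello), length (3).
        if record[0] != 0x16 or record[5] != 0x01:
            return None
        pos = 5 + 4                      # skip record and handshake headers
        pos += 2 + 32                    # client version + random
        pos += 1 + record[pos]           # session id
        (suites_len,) = struct.unpack_from("!H", record, pos)
        pos += 2 + suites_len            # cipher suites
        pos += 1 + record[pos]           # compression methods
        (ext_total,) = struct.unpack_from("!H", record, pos)
        pos += 2
        end = pos + ext_total
        while pos + 4 <= end:
            ext_type, ext_len = struct.unpack_from("!HH", record, pos)
            pos += 4
            if ext_type == 0:            # server_name extension
                # Layout: list length (2), name type (1), name length (2), name.
                (name_len,) = struct.unpack_from("!H", record, pos + 3)
                name = record[pos + 5 : pos + 5 + name_len]
                if len(name) != name_len:
                    return None          # truncated record
                return name.decode("ascii")
            pos += ext_len
    except (IndexError, struct.error, UnicodeDecodeError):
        return None
    return None


def pick_backend(record: bytes, default: Optional[Tuple[str, int]] = None):
    """Choose a backend from the SNI alone; the TLS payload is never decrypted."""
    sni = extract_sni(record)
    if sni is None:
        return default
    return BACKENDS.get(sni.lower(), default)
```

the point of the sketch is that the decision needs only the first, unencrypted bytes of the connection, which is why the external machine can forward the stream as-is and never needs the certificate or the private key.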

bind:

bind gives our machines a poor-fella's redundancy: every external machine hosts a bind instance, and the same zone is delegated to all of them, for example: balanced.domain.net

domain hosting configuration example:

balanced 300 IN NS machine01.domain.net.

balanced 300 IN NS machine02.domain.net.

balanced 300 IN NS machine03.domain.net.

machine01 300 IN A 100.100.100.1

machine02 300 IN A 100.100.100.2

machine03 300 IN A 100.100.100.3

each machine's bind holds a record for a common host inside that zone, pointing to the machine itself, for example: publish.balanced.domain.net

example bind configuration on machine01:

$ORIGIN .

; ---Area 1---

$TTL 300 ; 5min

; ---Area 2---

balanced.domain.net IN SOA machine01.balanced.domain.net. root.balanced.domain.net. (

2021100101 ; serial

300 ; refresh (5 min)

300 ; retry (5 min)

600 ; expire (10 min)

300 ; minimum (5 min)

);

; ---Area 3---

balanced.domain.net. IN NS machine01.balanced.domain.net.

; ---Area 4---

$ORIGIN balanced.domain.net.

;NOTE: machine01 is the server that solves the names

machine01 300 IN A 100.100.100.1

;NOTE: here we can define the content of our zone:

publish 30 IN A 100.100.100.1

balanced.domain.net. 300 IN A 100.100.100.1

this way, when we ask the DNS for "publish.balanced.domain.net", we are told to ask the 3 machines that serve the zone "balanced.domain.net"; the first machine we ask that is able to answer is the one that will deliver our service.

there are no primary or secondary servers: as far as the DNS is concerned, all of them are equal

example:

- we ask the root servers who has "publish.balanced.domain.net"

- the root servers, through the .net TLD servers, tell us that "domain.net" is managed by the main name-servers for our domain (hoster)

- we ask for "publish.balanced.domain.net" at the main name-servers for our domain (hoster)

- the hoster's name-servers answer that the zone "balanced.domain.net" is served by:

  - "machine01.domain.net"

  - "machine02.domain.net"

  - "machine03.domain.net"

- we ask machine02 for "publish.balanced.domain.net" (the resolver picks a server randomly or, at best, by lowest latency [0] [1])

- machine02 answers that "balanced.domain.net" is served by "machine02.balanced.domain.net" (as specified in the zone file)

- machine02 finally answers that "publish.balanced.domain.net" is itself, so 100.100.100.2
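the walk above can be modelled in a few lines of Python. this is only a toy model of the resolver's choice, not a real DNS client: `NAMESERVERS` mirrors the example records, `resolve_publish` is a made-up helper, and "alive" simply means "the bind on that machine answers".

```python
import random

# Each delegated name server answers "publish.balanced.domain.net"
# with its own address (values taken from the example records).
NAMESERVERS = {
    "machine01.domain.net": "100.100.100.1",
    "machine02.domain.net": "100.100.100.2",
    "machine03.domain.net": "100.100.100.3",
}


def resolve_publish(alive, rng=random):
    """Try the delegated name servers in random order; the first one that
    answers hands out its own address. Returns None if nobody answers."""
    candidates = list(NAMESERVERS)
    rng.shuffle(candidates)
    for ns in candidates:
        if ns in alive:
            return NAMESERVERS[ns]
    return None


if __name__ == "__main__":
    # machine01 is down: the answer is always one of the other two machines.
    up = {"machine02.domain.net", "machine03.domain.net"}
    print(resolve_publish(up))
```

a machine that is down cannot answer the DNS query, so it never hands out its own address: that is the whole failover mechanism. real resolvers also cache answers for the record's TTL, which the model ignores.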

file sync:

we want to sync the configuration of all the servers from a single, possibly shared, point. to do that we use a git repo that holds the configuration: a script called by cron checks every 2 minutes whether the repo has changed and, if so, downloads the new haproxy config files and restarts the service.
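a minimal sketch of such an updater in Python, under several assumptions: the clone lives in `/opt/whack-config` (hypothetical path), the repo holds a single `haproxy.cfg` at its root, the branch has an upstream configured, and haproxy runs as a systemd unit. the `haproxy -c` validation step is not part of the script described above; it is included only because restarting with a broken config would take the proxy down.

```python
import shutil
import subprocess
from pathlib import Path

REPO = Path("/opt/whack-config")             # hypothetical local clone
LIVE_CFG = Path("/etc/haproxy/haproxy.cfg")  # common default, adjust as needed


def git(repo, *args, run=subprocess.run):
    """Run a git command inside the clone and return its trimmed stdout."""
    result = run(["git", "-C", str(repo), *args],
                 check=True, capture_output=True, text=True)
    return result.stdout.strip()


def repo_changed(repo, run=subprocess.run):
    """Fetch, then compare the local HEAD with the upstream branch."""
    git(repo, "fetch", "--quiet", run=run)
    local = git(repo, "rev-parse", "HEAD", run=run)
    remote = git(repo, "rev-parse", "@{u}", run=run)
    return local != remote


def sync(repo=REPO, live_cfg=LIVE_CFG, run=subprocess.run):
    """Pull new config and restart haproxy; return True if something changed."""
    if not repo_changed(repo, run=run):
        return False
    git(repo, "merge", "--ff-only", "@{u}", run=run)
    new_cfg = Path(repo) / "haproxy.cfg"
    # Assumption: validate first, so a bad commit never reaches the live proxy.
    run(["haproxy", "-c", "-f", str(new_cfg)], check=True)
    shutil.copy2(new_cfg, live_cfg)
    run(["systemctl", "restart", "haproxy"], check=True)
    return True


if __name__ == "__main__":
    sync()
```

a cron schedule of `*/2 * * * *` matches the 2 minute interval. the `run` parameter exists only so the git and systemctl calls can be replaced when trying the script out without touching a real machine.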

todo:

- bind: check TTLs and optimize

- bind: better define failover behaviour

- bind: define who-asks-what-to-who in a question-answer way

- hap: example configs

- filesync: example config

- docs: better images with more info

- docs: specify different zones for the external machines to increase redundancy

- docs: list ports required

- create ansible playbook to automate the tasks

- general: check if HSTS might break haproxy SNI redirect

- filesync: add gpg repo signing

- filesync: verify the commit signature when auto-updating the config file

- filesync: validate the config file before copying it and restarting the service

- filesync: overwrite the local file if it differs from the one in the repo